How To Avoid 7 Fatal Mistakes For Your Blog In Google Webmaster Tools

[Image: 7-fatal-mistakes-to-avoid-in-webmaster-tools]

Google Webmaster Tools is a unique set of tools that lets webmasters optimize their blogs and websites and offers a host of technical information about them.

It tells webmasters how their sites are performing, what types of traffic they receive, the geographic location of that traffic and a lot more.

Besides, it also alerts webmasters to other important issues such as crawler issues, security issues, broken links and many more.

However, the most important aspect of Webmaster Tools is search engine optimization (SEO). Tracking and optimizing a website's performance is essential for drawing organic traffic from search engines.

By fixing the issues above, a website can rank higher and start receiving a lot of organic traffic from the search engines.

- To open a Webmaster Tools account, go to www.google.com/webmasters/tools and sign in with your Google ID and password.

- Click 'ADD A SITE'

- Verify your site

To learn more about how to add and verify your site, read 'Add Your Blog/Website To Google Webmaster Tools'.

Using the advanced features of Webmaster Tools takes a lot of caution and knowledge, because a mistake can seriously affect the SEO campaign of the website and the crawler may even stop indexing it.

This article explains (with screenshots) how the wrong use of '7 Basic Features' of Search Console may ruin your blog completely, and how to avoid these mistakes.

1) Sitemap Submission

A sitemap tells Googlebot about the contents of the website so that it can index them faster. Although it is an amazing feature, it sometimes brings a few problems with it.

It has been observed that the XML (Extensible Markup Language) sitemap itself sometimes appears in Google's search engine result pages (SERPs), which makes your site look low quality in the eyes of your competitors and viewers.

To avoid this issue, make sure to submit only the '.gz version' of your sitemaps to the console ('.gz' is short for gzip, the compression format used in this case). Needless to say, submitting a '.gz version' of a sitemap requires some technical knowledge.
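Creating the '.gz version' can be done with a few lines of Python. The following is only a minimal sketch: the function name `gzip_sitemap` and the file name `sitemap.xml` are assumptions for illustration, and your blogging platform may already offer a compressed sitemap of its own.

```python
import gzip
from pathlib import Path


def gzip_sitemap(xml_path: str) -> Path:
    """Write a gzip-compressed copy of xml_path next to it and return its path."""
    source = Path(xml_path)
    # sitemap.xml -> sitemap.xml.gz (the original file is left in place)
    target = source.with_name(source.name + ".gz")
    # Compress the raw bytes; the XML content itself is not changed.
    target.write_bytes(gzip.compress(source.read_bytes()))
    return target
```

Calling `gzip_sitemap("sitemap.xml")` would write `sitemap.xml.gz` to the same folder, and that is the file to upload to your site and submit in the console.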

The second problem appears when a webmaster, especially a new one, attempts to force Googlebot to index a large number of pages or articles very fast by submitting a lot of XML sitemaps frequently. This type of attempt can alert 'Google Panda', which may flag the website as spam and may even stop indexing it further.

So, the next time you submit your sitemap to Webmaster Tools, be careful about the sitemap files, never submit too many sitemaps in quick succession, and never 'fetch' the various posts/articles again and again.

[Image: add-test-sitemap]

2) Geographic Targeting

Optimizing your blog for geo targeting is indispensable for conversion. Geo targeting means responding actively to a specific geographic location. This feature enables Google to serve country- or location-specific ads on your blog, based on the IP address of the viewers.

The ads served are relevant to the language, customs and queries of the targeted country or location, thus driving more conversions. The feature is highly effective if your blog has a generic top-level domain (TLD) such as .com, .net, .org or .co, while the target is set by default if it has a country-specific TLD.
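To check which of the two cases applies to your own domain, a rough test is the length of the TLD, since two-letter TLDs are country codes. The sketch below is only an illustration: the function name `tld_kind` and the exception list (which holds only .co, following the list above) are assumptions, not an official Google list.

```python
# Hypothetical exception list: country-code TLDs assumed to be treated as generic.
GENERIC_LOOKING_CCTLDS = {"co"}


def tld_kind(hostname: str) -> str:
    """Return 'generic' or 'country-specific' for the hostname's top-level domain."""
    tld = hostname.lower().rstrip(".").rsplit(".", 1)[-1]
    # Two-letter TLDs are country codes; longer ones (.com, .net, .org) are generic.
    if len(tld) == 2 and tld not in GENERIC_LOOKING_CCTLDS:
        return "country-specific"
    return "generic"
```

For a 'generic' result the geographic target is yours to choose in the console. For a 'country-specific' result it is already implied by the domain.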

If your blog is set to point to a particular country or region, a significant boost in ranking may be achieved in respect of that country or region.

However, it must be remembered that this is done only at the cost of lowering your Google rankings in other 'Google country versions'.

For example, if your site points to the United States, your Google ranking may improve significantly for that country, but you are bound to lose rankings in other countries or regions of the world.

So, it is better not to use this feature and to keep it as 'Unlisted' if your site is aimed at viewers all over the world, irrespective of country or region.

[Image: geo-targeting-of-blog]

3) Crawl Rate

The term crawl rate means how many requests per second Googlebot makes to the site while it is crawling it, say, 10 requests per second.
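The arithmetic behind such a figure is simple. As a sketch, the two helper functions below (hypothetical names, and assuming one request per page) turn a crawl rate into the gap between requests and the time a full crawl would take.

```python
def crawl_delay_seconds(requests_per_second: float) -> float:
    """Average gap between two requests at the given crawl rate."""
    if requests_per_second <= 0:
        raise ValueError("crawl rate must be positive")
    return 1.0 / requests_per_second


def crawl_duration_seconds(page_count: int, requests_per_second: float) -> float:
    """Time needed to request page_count pages, assuming one request per page."""
    if requests_per_second <= 0:
        raise ValueError("crawl rate must be positive")
    return page_count / requests_per_second
```

At 10 requests per second the gap between requests is 0.1 seconds, so a blog with 500 posts would be covered in about 50 seconds.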

How often Googlebot crawls your site cannot be changed. However, if you want Googlebot to index your new or updated content, you may use the 'Fetch as Google' feature instead.

Google is equipped with advanced and smart algorithms that enable it to crawl as many posts of your site as possible on each visit.

Changing the default crawl rate set by Google is not recommended.

In the Webmaster Tools dashboard, click Crawl > Crawl Rate (shown in red in the screenshot below) to find a crawl report for your site.

[Image: crawl-rate-statement-google]

4) Crawler Access

This feature lets you instruct the crawler which posts and pages of your blog should be indexed and which should be left untouched. It also lets you generate a robots.txt file or remove a specific URL.

Using this feature incorrectly can also heavily affect your blog's SEO campaign.

So, do not touch this feature unless you are confident and familiar with its use.

[Image: robots.txt-file]

If you want to find out whether a specific post of your blog is accessible to Googlebot, enter the URL of that post in the field at the bottom, as shown in the above screenshot, and click 'Test'. If the post is accessible, it will appear as 'ALLOWED' in green, as shown in the following screenshot.
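A similar check can be run offline before you change anything in the console. The sketch below uses Python's standard `urllib.robotparser` module; the robots.txt content, the `example.com` URLs and the helper name `is_allowed` are made up for illustration. Python's parser is not Google's own tester, so the two may disagree on unusual rules.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, for illustration only.
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /search
Allow: /
"""


def is_allowed(robots_txt: str, url: str, user_agent: str = "Googlebot") -> bool:
    """Return True if robots_txt lets user_agent fetch url."""
    parser = RobotFileParser()
    # parse() takes the file as a list of lines, so no network access is needed.
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)
```

With the sample rules, an ordinary post URL such as `https://example.com/2015/01/my-post.html` is allowed, while anything under `/search` is blocked.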

[Image: google-webmaster-tools]

If you want to find out whether your site has any crawl errors that prevent Googlebot from crawling a specific post, click 'Crawl Errors' (in red, as shown in the following screenshot) and fix the errors accordingly.

[Image: crawl-errors-webmaster-tools]

5) Sitelinks

Sitelinks are links to the interior pages of a site, generated by Google automatically. These links appear in the SERPs (search engine result pages) to help viewers navigate the site more easily.

These links are calculated algorithmically from the link structure and anchor text of the site and are an important element in attracting viewers' attention.

If the structure of the site doesn't allow the algorithms to find good sitelinks, the sitelinks are considered irrelevant to the user's query and are not shown in the SERPs.

Sitelinks are generated automatically and are constantly being improved by algorithms, so not much can be done about them. Which sitelinks appear depends almost entirely on Google.

However, to improve the quality of sitelinks, there are some best practices to follow. Make sure to use anchor text and alt text that is compact, informative and avoids repetition.
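Repetition in anchor text is easy to spot with a small script. The following is a rough sketch using only Python's standard library; the class and function names are hypothetical, and it looks at anchor text only, not at alt text.

```python
from collections import Counter
from html.parser import HTMLParser


class AnchorTextCollector(HTMLParser):
    """Collect the visible text of every <a> element in an HTML document."""

    def __init__(self):
        super().__init__()
        self.texts = []
        self._inside_link = False
        self._buffer = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._inside_link = True
            self._buffer = []

    def handle_data(self, data):
        if self._inside_link:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._inside_link:
            # Join the pieces and collapse runs of whitespace.
            self.texts.append(" ".join("".join(self._buffer).split()))
            self._inside_link = False


def repeated_anchor_texts(html: str) -> list:
    """Return the anchor texts (lowercased) that occur more than once, sorted."""
    collector = AnchorTextCollector()
    collector.feed(html)
    collector.close()
    counts = Counter(text.lower() for text in collector.texts if text)
    return sorted(text for text, n in counts.items() if n > 1)
```

If a page links to several different posts with the same text, such as 'Read more', that text is returned and is a good candidate for rewording.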

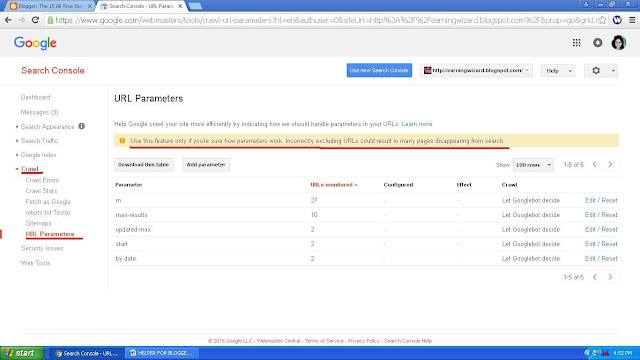

6) URL Parameter Setting

This unique feature is designed for webmasters who want to help Google crawl their sites more effectively.

Setting URL parameters is a powerful tool in the hands of competent and experienced webmasters only. By setting them correctly, one can vastly improve how efficiently Googlebot indexes the site.

On the other hand, setting them incorrectly may result in Google ignoring important pages and articles of your site. This is a very tricky issue, and mishandling it may wreak havoc on your SEO effort.

After crawling the entire site along with the external links, Google shows you the most popular URL parameters.

You have the option to select 'Ignore', 'Not Ignore' or 'Let Google Decide' for each of these parameters. The parameters cover a wide range, from product IDs and session IDs to Google Analytics parameters and page navigation variables, and may sometimes even lead to 'duplicate content issues'.
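To see why such parameters cause duplicate content, it helps to strip them and compare what is left. The sketch below is only an illustration: the parameter names in `IGNORED_PARAMS` and the `example.com` URLs are assumptions, and the right list depends entirely on how your own site uses its parameters.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical parameter names assumed not to change the page content.
IGNORED_PARAMS = {"sessionid", "utm_source", "utm_medium", "utm_campaign"}


def canonical_url(url: str) -> str:
    """Drop ignored query parameters so duplicate URLs collapse to one form."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in IGNORED_PARAMS
    ]
    # Rebuild the URL with only the parameters that affect the content.
    return urlunsplit(parts._replace(query=urlencode(kept)))
```

Here `https://example.com/shop?productid=42&sessionid=abc123` and `https://example.com/shop?productid=42&utm_source=news` both reduce to `https://example.com/shop?productid=42`. In other words, they are the same page reachable under two URLs. A parameter like `productid`, which does change the content, must never be put on the ignore list.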

It requires a lot of expertise, knowledge and experience to set these parameters correctly. Any incorrect use of this feature may throw your content completely out of SEO purview and may prove fatal for your blog.

So, use this tool only if you are sure that you know how parameters work.

[Image: url-parameters-setting]

7) Change of Address

This feature applies to custom domains only, not to subdomains. It enables the admins of custom domains to move a website from its present domain to a new one.

Redirecting the old pages to the new ones using 301 redirects and URL mapping demands a lot of knowledge, but it is extremely important too.
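The simplest mapping keeps every path the same and changes only the domain. The sketch below shows that idea with Python's standard library. The function name `redirect_for` and the `.example` host names are hypothetical, and a real migration may also need individual rules for pages whose paths change.

```python
from urllib.parse import urlsplit, urlunsplit


def redirect_for(old_url: str, old_host: str, new_host: str):
    """Return (301, new_url) for URLs on old_host, or None for any other host."""
    parts = urlsplit(old_url)
    if parts.hostname != old_host.lower():
        return None
    # Keep scheme, path, query and fragment; swap only the host.
    return 301, urlunsplit(parts._replace(netloc=new_host))
```

For example, `redirect_for("https://old-blog.example/2015/01/post.html", "old-blog.example", "new-blog.example")` returns status 301 with the target `https://new-blog.example/2015/01/post.html`, so each old page points to its counterpart rather than to the new home page.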
